Should nonprofits be reporting evaluation findings to funders?

We have pointed out in previous posts that evaluation is less likely to lead to learning or action when it is mandated by an external actor, such as a funder. In this post, we explore the relationship between accountability, evaluation, and learning in more depth.

- What is the relationship between evaluation and accountability? What should it be?
- Can we imagine a sector where nonprofits are held accountable for learning, development, and adherence to their mission? If so, what would that look like?
- What role would evaluation play in that process?

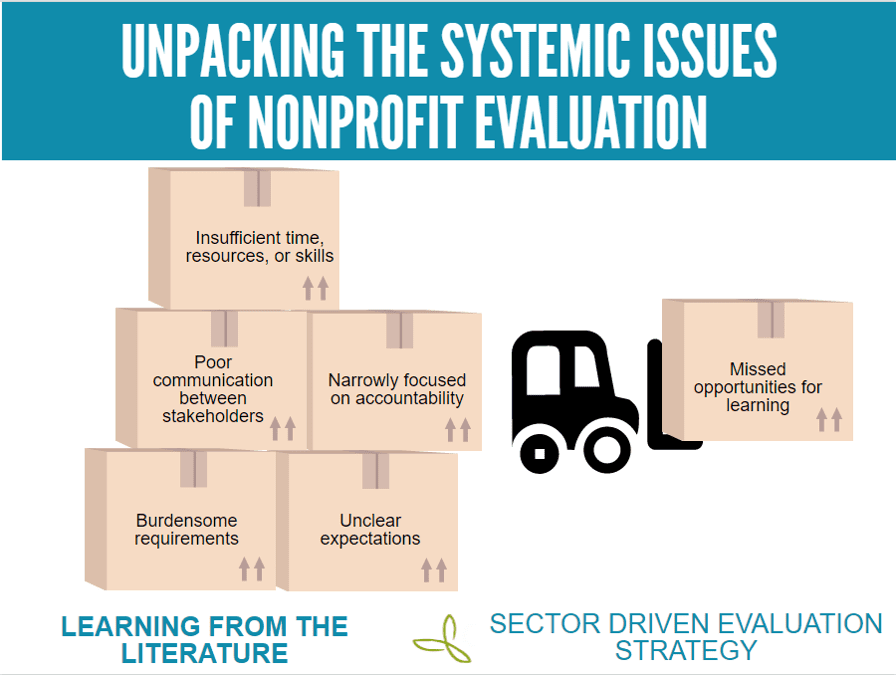

Nonprofits can be (and are) held accountable for many different things, including how they spend money, how they are governed, the quality or type of service or activity they provide, and their adherence to regulations. Evaluation typically comes into the mix as a way to hold nonprofits accountable for the outputs and impacts they achieve in their communities, and for how well these impacts align with key funder, government, or community priorities.

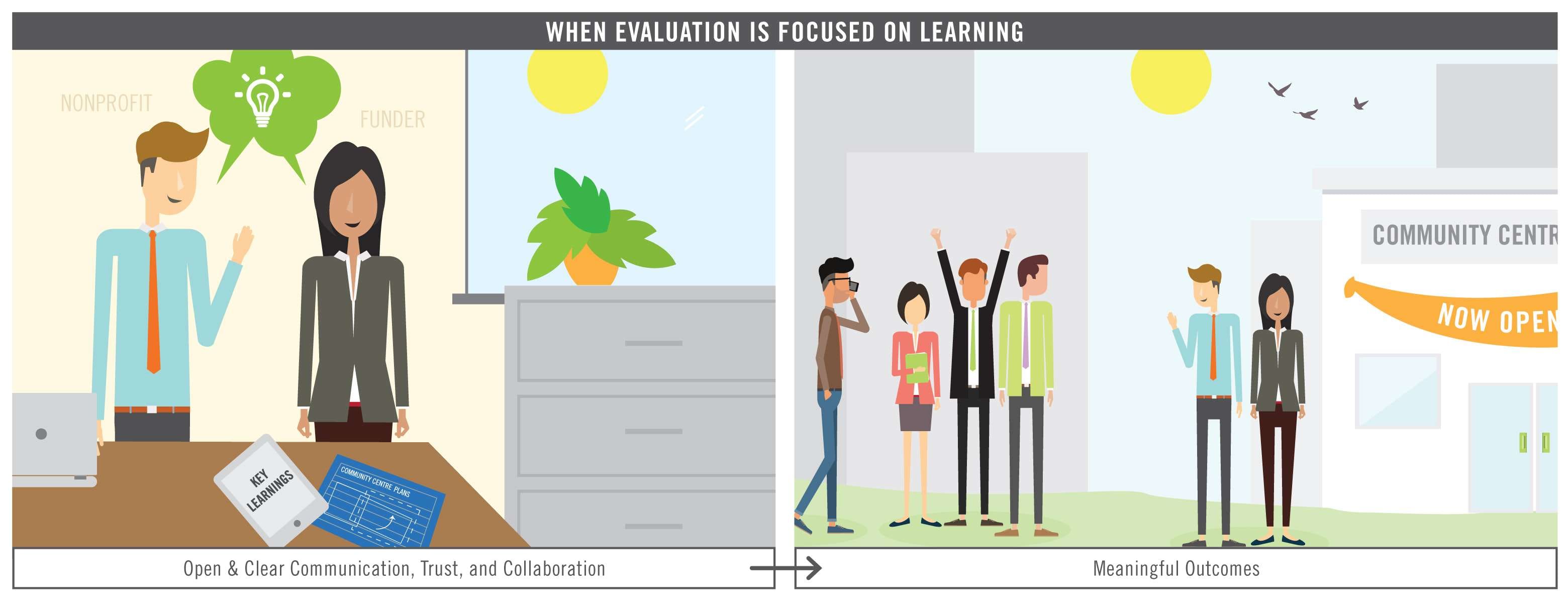

Alnoor Ebrahim (2010) suggests that it might be possible to hold nonprofits accountable for different kinds of things than we do now. Nonprofits could be accountable for how well they advance their mission, the degree to which they learn over time, and how well they adapt their approach in order to remain focused on the reasons they exist. Lehn Benjamin (2008) goes a step further, suggesting that we ought to rethink the very definition of accountability. Benjamin argues that accountability relationships need not be set up to “direct and control the actions of other agents.” They could, instead, “serve as vehicles for discussion and greater understanding between the principal and agent about the problem at hand.”

What if funders saw themselves as one stakeholder in a networked approach to accountability?

At present, funders often set up accountability requirements in isolation. They ask nonprofits to send them many different kinds of information and rely heavily on the content of those reports to make their judgements about the success of grants. They rarely take into account the fact that nonprofits are accountable, in different ways, to many other audiences (other funders, their boards, and the people they work with and serve).

Can we envision a different approach? What if accountability to individual funders focused on basic operational matters, such as money spent and clients served? Critical reflection and learning could then be moved out of the one-on-one communication between a grant recipient and a single funder. Responsibility for setting the outcome evaluation agenda, synthesizing findings, and demonstrating impact could be handled more collaboratively and independently. It could, for example, be handed to collaborative planning tables (such as poverty round tables) or collective impact backbone organizations (Born, 2016). Provincial umbrella groups, or nonprofits focused on capacity building, planning, and research, could also take on the role of managing and supporting impact measurement. Nonprofits would then share their evidence of impact through a network of partners that functioned like a community of practice built on reciprocal accountability.

This approach would have a number of advantages. It might, in fact, do a better job of providing funders with strong evidence of the impact of their investments than the accountability mechanisms we use now. Funders are often members of collaborative planning tables and networks. Consequently, they could help set the evaluation and learning agenda and participate in the process of critical reflection.

Funders could provide financial support for evaluation design, training, data analysis, and knowledge mobilization. This, in turn, may enable funders to use the findings to demonstrate their own impact and to make strategic investment decisions.

Perhaps the findings that emerge from this process would be more honest, more interesting, and more useful than the information gleaned from annual grant reports.

Suggested further reading

Benjamin, L. (2008). Evaluator’s role in accountability relationships: Measurement technician, capacity builder or risk manager? Evaluation, 14(3), 323-343.

Born, P. (2016). Champions for change: Leading a backbone organization for collective impact. Paper presented at the Tamarack Champions for Change Forum, Halifax.

Ebrahim, A. (2010). The many faces of nonprofit accountability [Working paper]. Harvard Business School.

Harwood, R., & Creighton, J. (2009). The organization-first approach: How programs crowd out community. Bethesda: The Harwood Institute.