Whaddaya mean, “evaluation”? Mismatched expectations in nonprofit measurement

In Ontario’s nonprofit sector, evaluation is a word that gets used a lot. Different kinds of data-gathering approaches with different purposes sometimes get lumped together under the general heading of evaluation. This can lead to miscommunication and unrealistic expectations. To try to clear things up, we have created this resource (Editor’s note: We’ve updated this resource; see Version 2.0, linked at the end of this post). For the purposes of this discussion, we suggest that people in the sector use the term evaluation to describe four basic approaches to measurement work. We know the reality is more complicated than that, so allow us to elaborate.

Here are four definitions that we will be using:

1. Performance measurement is the day-to-day tracking of simple descriptive information.

2. Program evaluation tends to be more intensive, time-limited, and focused on measurement of short-term outcomes.

3. Applied research tends to be more theory-driven and is designed to generate new knowledge that supports general conclusions rather than practical recommendations for program managers.

4. Systems evaluation focuses on understanding the cumulative effect of multiple programs or strategies.

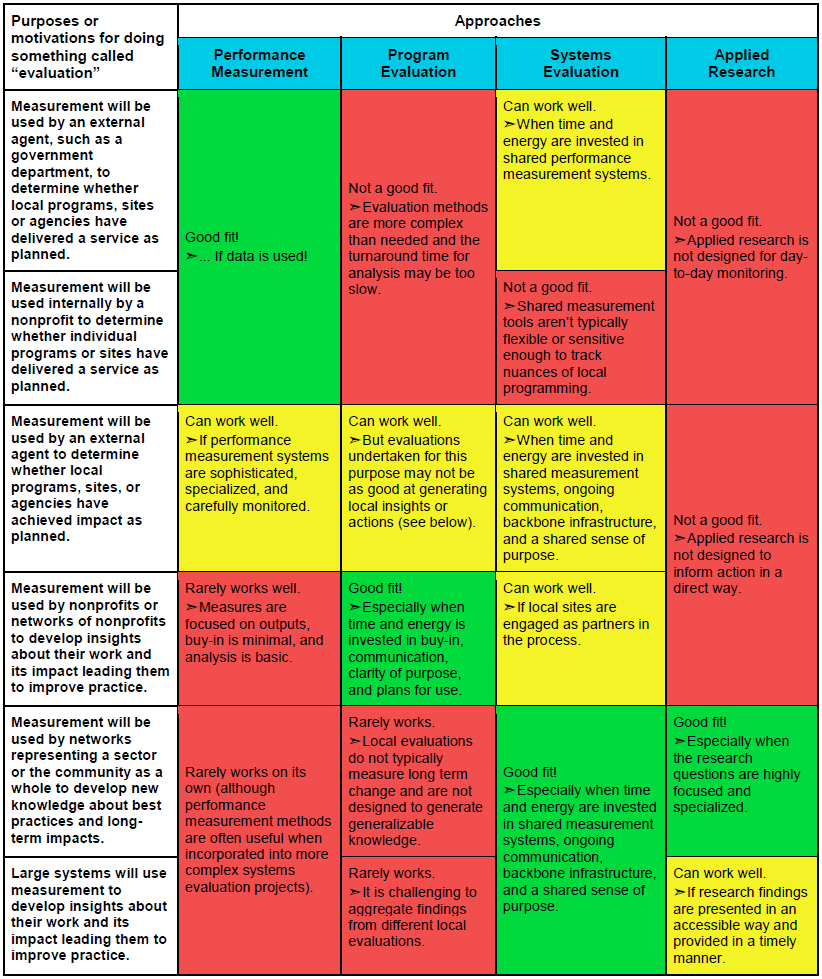

At first glance, it may seem like we are splitting hairs here. However, we think these four approaches are different from one another in really important ways. Check out our table that lists six common reasons why nonprofits engage in evaluation and then indicates which of the four evaluation approaches works best for each.

In the table, the green cells represent situations where the approach and expectations are well matched. Let’s say, for example, your program collects basic data about how many people take part, who those people are, and how satisfied they are with your service in a general way. This is a classic example of a good performance measurement approach. If your purpose in gathering this data is to demonstrate to a funder that you are carrying out the program as planned and that things are running smoothly, this approach works really well. You’re in the green cell in the top-left of our table.

On the other hand, maybe you are doing much more ambitious measurement work. Perhaps you are working with sister agencies in other cities and an academic partner on a study of the long-term impacts of a certain program model. If so, you’re probably down in the bottom-right section of our table, in another green cell, and you’re good to go! The applied research approach is probably right for you.

So far, so good?

However, there are many situations where the approach and expectation don’t match as well. Imagine a nonprofit with a strong track record of day-to-day performance measurement. Let’s say this nonprofit starts to shift its thinking about the purpose of its measurement work. Perhaps, for example, it would like to get better at measuring program outcomes. It might be tempting to think that this would be an easy shift: a few tweaks to the client survey and off we go. However, this expectation may not be realistic. Getting good at outcome measurement may require the agency to develop its theory of change or think it through more fully. It may need to begin doing pre-test surveys as well as post-tests. It may need to start asking somewhat more intrusive questions of its clients. In short, a change in evaluation purpose or expectations may require a significant change in evaluation approach. In our table, using performance measurement approaches to demonstrate achievement of impact appears in a yellow cell. That means it can work, under the right circumstances, but you should proceed with caution. Shifting to a program evaluation approach (the green cell in the middle of the table) might be a better way to go.

Funders in the nonprofit sector can also run into challenges around mismatched expectations. Imagine a funder that has asked all grant recipients to engage in program evaluation work and report on the outcomes. Let’s assume that the grant recipients have done this measurement work well. That funder may believe that these individual program evaluation reports can be rolled up to demonstrate system impact, or the impact of the funder’s investments on the community as a whole. This may not be a realistic expectation, and it shows up in our table as a red cell. Unless the funder and its grant recipients have worked together to create a measurement strategy specifically designed to demonstrate system impact (as the Canadian Women’s Foundation does, for example), lots of individual evaluation reports aren’t likely to add up to evidence of systems change.

If you’d like to know more about evaluation definitions and approaches, there’s an app for that. However, the core point we are making is that no single evaluation project can be all things to all people. It is important to think about whether your approach matches your expectations and whether everyone involved in your evaluation work has similar expectations.

[Check out our updated version 2.0](https://cdn.theonn.ca/wp-content/uploads/2017/02/Evaluation-Approaches-Resource-2.0.pdf)

[Check out our original resource for more info](https://cdn.theonn.ca/wp-content/uploads/2016/06/Evaluation-Approaches-Resource.pdf)
